Earlier this week, Truth Social tipped off the FBI about threats made by a user of the platform to kill President Joe Biden. Though it is unclear if the individual would have actually taken any action, the FBI took it quite seriously—and Craig Deleeuw Robertson was killed on Wednesday following the FBI’s efforts to arrest him.

What is most notable is that such a threat was reported by the social media company, and that law enforcement responded. In this case, it did involve the president, but it raises the question of why other threats seem to have been ignored.

Portents On Social Media

Within days of mass shootings and other tragic events, news often emerges that the warning signs were present on social media. In the spring of 2022, the 18-year-old gunman who entered a Texas elementary school and slaughtered 19 children and two teachers had posted disturbing images on Instagram, while his TikTok profile warned, “Kids be scared.”

Earlier this year, a gunman who killed eight people at a Dallas-area outlet mall had also shared his extremist beliefs on social media.

As this reporter wrote last year, many have asked if warning signs were missed in past incidents.

William V. Pelfrey, Jr., Ph.D., professor in the Wilder School of Government and Public Affairs at Virginia Commonwealth University, responded at the time, “It is impossible to prevent people from making threats online.”

Pelfrey also said that social media organizations have a moral responsibility to identify and remove threatening messaging.

That is apparently what Truth Social, the social media company owned by former President Donald Trump, did in March. It tipped off the FBI about threats made by Robertson, whom the bureau subsequently investigated.

Truth Social Reacted

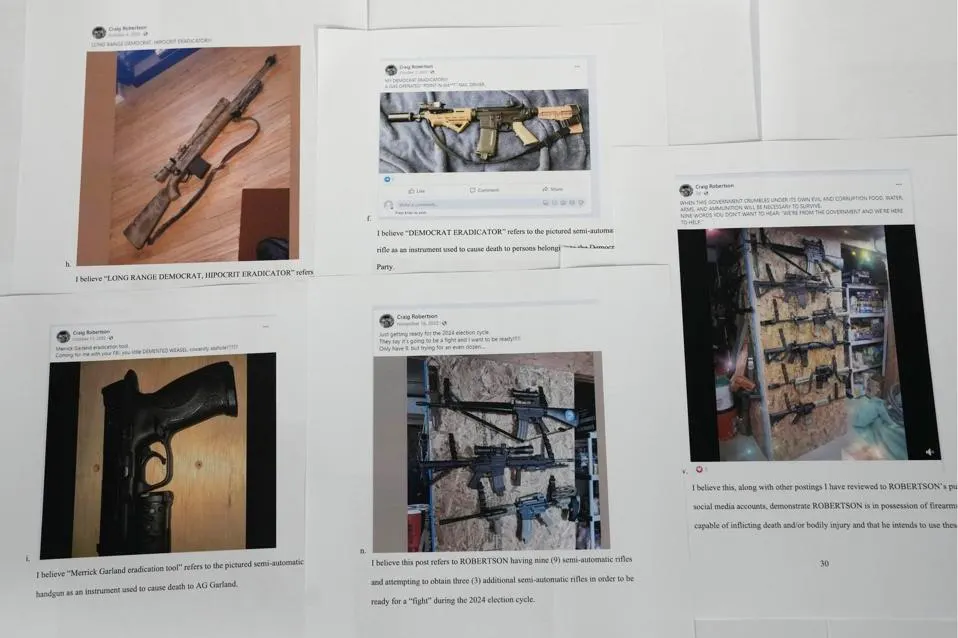

On March 19, an FBI agent received a notification from the FBI National Threat Operations Center regarding a threat to kill Manhattan District Attorney Alvin Bragg, after the threat center was alerted about Robertson’s posts by administrators at Truth Social. The FBI continued to investigate and found that Robertson posted similar threats against Vice President Kamala Harris, U.S. Attorney General Merrick Garland and New York Attorney General Letitia James.

Truth Social seems to have been very direct in sharing the information. That fact has been a surprise to many given the vitriol that the former president has directed at his critics. But even Trump likely would not have wanted to see his platform used in such a nefarious way.

“A platform partially owned by Trump that fostered an assassination that was successful would have blown back on Trump criminally,” suggested technology industry analyst Rob Enderle of the Enderle Group.

“And when it comes to an assassination, the rules tend to go out the window with the potential of the service being identified as a terrorist organization and aggressively mitigated—as in the executives go to jail and the service gets shut down,” added Enderle. “No social media platform wants to be on the wrong side of a presidential assassination, or any major event like 9/11 that could be of national importance because there is a better than even chance it wouldn’t survive the resulting event regardless of laws and existing protections.”

Truth Social Came Through Where Other Platforms Failed

It is notable too that a fairly new and much smaller platform was able to alert the FBI, while more established social media services have largely failed to see past warning signs. However, the number of users could be the issue, and there may simply be too many posts for a larger platform to monitor, especially from accounts with few followers.

“Size is a huge problem, for instance, in Bosnia a man just broadcast killing his ex-wife on Instagram and the platform didn’t see it until it had been widely seen,” said Enderle. “The industry is looking heavily at artificial intelligence (AI) to address their inability to scale to address problems like this before one of them causes a reaction that makes social media obsolete.”

That could usher in a new phase in the evolution of social media.

“It’s developed from a platform for friendship, sharing, and getting ‘real’ verifiable news and information to the unintended consequences of becoming a platform for bullying as well as fermenting and spreading misinformation, hate speech, fear-mongering, and giving license for people to say or do anything. All in the name of free speech,” added Susan Schreiner, senior analyst at C4 Trends.

“In this coming phase in the social media timeline we will also need to contend with Deep Fakes and other nefarious uses for technology,” Schreiner added.

Lack Of Accountability From The Platforms

There are guardrails for broadcast and even ratings for games and movies, yet such protections are not present on social media.

“Why are social media platforms treated so differently?” pondered Schreiner. “What is the responsibility of social media platforms for events like that in Utah or being a platform for spreading antisemitic lies? Why are they significantly downsizing social moderation?”

The sense of decency and responsibility that gave birth to social media has essentially gone by the wayside, and the situation will likely get worse.

“This is not about legislating morality – but rather the discussion might start around flags, mechanisms, and guardrails related to identifying and deterring threats to individuals, local community institutions, and so on – for the greater good and safety of society,” Schreiner suggested.

A New Form Of Misinformation?

It is unclear now if technology could help, hinder or even confuse matters in monitoring for such dangerous content. On the one hand, AI could help track individuals who may post threats, but the same technology is being employed to create the aforementioned Deep Fakes and to share misinformation/disinformation.

One concern is whether individuals could employ AI to create misleading posts to essentially “frame” individuals or otherwise use the technology for iniquitous purposes.

“That’s true today, swatting is an example of people being put at high, and in some cases mortal, risk as a result of false information,” Enderle continued. “AI developers are already bringing to market products that can better identify Deep Fakes, but this is a weapons race where the creators of the tools have the advantage of the initiative. So this is likely to continue to be a problem.”